Sifts

Caution

This is a fully experimental project currently in its initial stages. Its effectiveness and results are still under evaluation.

Caution

For this experiment, we do not use any real client data. All testing data is either artificially generated by our developers or extracted from open-source projects to ensure privacy and security.

Introduction

Sifts is a security analysis tool that uses large language models (LLMs) to detect vulnerabilities in client code. It relies on a knowledge base built from vulnerabilities reported by our penetration testers. By referencing these known vulnerabilities, the algorithm analyzes code that has not yet been flagged to identify similar security risks. This makes vulnerability detection more efficient and helps secure software systems proactively.

Public Oath

At Fluid Attacks, we are committed to enhancing code security through AI-driven vulnerability detection. We pledge to continuously refine our detection algorithms, expand our knowledge base with real-world vulnerabilities, and ensure our analysis remains accurate and actionable.

Sifts will always adhere to the following principles:

- Utilize AI ethically and responsibly for security analysis

- Maintain a continuously updated knowledge base of reported vulnerabilities

- Ensure transparency and accuracy in vulnerability detection

- Prioritize the security and privacy of client data, ensuring that our agreements with LLM providers explicitly state that they do not store, use, or retain any data we send them

Architecture

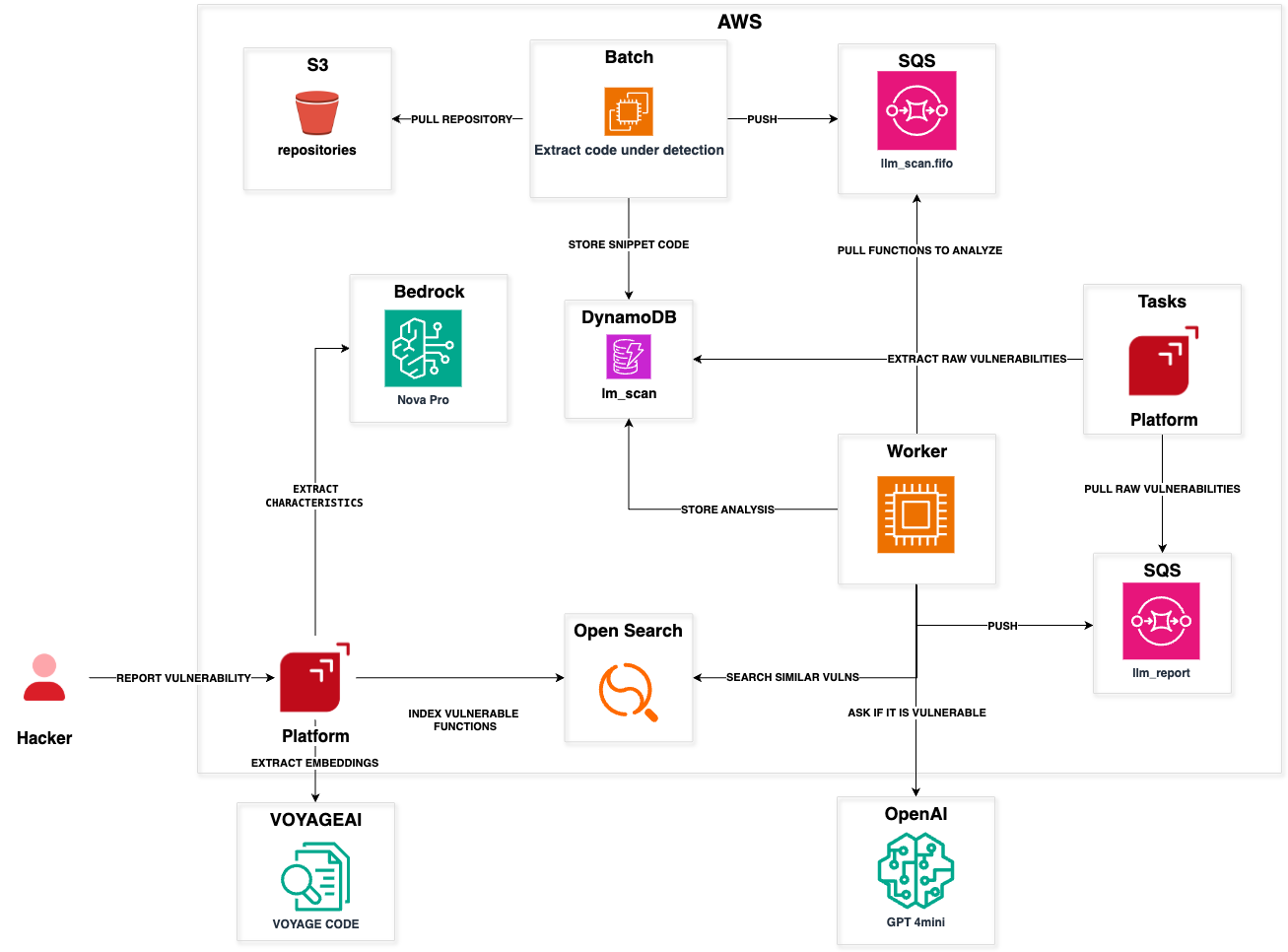

The process is divided into two main phases: feeding the knowledge base and evaluating client functions.

Knowledge base construction

- A penetration tester reports a vulnerability to the Fluid Attacks platform.

- The platform extracts the complete function containing the vulnerable line of code.

- An LLM is queried to extract:

  - Code characteristics: Syntax and structure.

  - Semantic characteristics: Purpose and logic flow.

  - Functional characteristics: Expected behavior and operations performed.

- An embedding of the code is generated using Voyage AI, converting the function into a vector representation that captures its behavior.

- These three elements (semantic data, functional data, and embeddings) are stored in Amazon OpenSearch, ensuring they can be efficiently retrieved for future comparisons.
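As a rough illustration of how the stored elements might be grouped, the sketch below bundles the semantic data, functional data, and embedding of one reported vulnerability into a single OpenSearch document and builds a matching k-NN index definition. The `KnowledgeBaseEntry` class, the field names, and the 1024-dimension vector size are assumptions for illustration, not the project's actual schema; only the `knn_vector` field type and the `index.knn` setting come from the OpenSearch k-NN plugin.

```python
from dataclasses import dataclass, asdict

# Assumed vector size; the real value depends on the embedding model configuration.
EMBEDDING_DIMENSION = 1024


@dataclass
class KnowledgeBaseEntry:
    """Hypothetical record for one vulnerability reported by a pentester."""

    vulnerability_id: str
    semantic_characteristics: str
    functional_characteristics: str
    embedding: list[float]

    def to_document(self) -> dict:
        """Return the body that would be indexed in OpenSearch."""
        if len(self.embedding) != EMBEDDING_DIMENSION:
            raise ValueError(
                f"expected {EMBEDDING_DIMENSION} dimensions, got {len(self.embedding)}"
            )
        return asdict(self)


def knowledge_base_index_body(dimension: int = EMBEDDING_DIMENSION) -> dict:
    """Index settings and mappings that enable k-NN search on the embedding field."""
    return {
        "settings": {"index": {"knn": True}},
        "mappings": {
            "properties": {
                "vulnerability_id": {"type": "keyword"},
                "semantic_characteristics": {"type": "text"},
                "functional_characteristics": {"type": "text"},
                "embedding": {"type": "knn_vector", "dimension": dimension},
            }
        },
    }
```

With the opensearch-py client, these bodies could be passed to `client.indices.create(index=INDEX_NAME, body=knowledge_base_index_body())` and `client.index(index=INDEX_NAME, id=entry.vulnerability_id, body=entry.to_document())`, where `INDEX_NAME` is a placeholder.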

Code evaluation

- A client repository is scanned for source files in all supported programming languages.

- The repository files are parsed using Tree-sitter, identifying all function nodes in the codebase.

- Extracted functions are stored in an intermediate DynamoDB table for later processing.

- Once stored in DynamoDB, a message containing the function ID is sent to the SQS queue `llm_scan.fifo`.

- A worker continuously listens to `llm_scan.fifo`, waiting for function analysis requests.
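The scan-and-enqueue step could look roughly like the sketch below. It swaps Tree-sitter for Python's standard `ast` module, so it only handles Python files. The function ID scheme, the record fields, and grouping FIFO messages by repository are illustrative assumptions. `MessageGroupId` and `MessageDeduplicationId` are the standard SQS FIFO parameters.

```python
import ast
import hashlib
import json


def extract_functions(path: str, source: str) -> list[dict]:
    """Return one record per function definition found in a Python source file."""
    records = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Hypothetical ID scheme: hash of file path, function name, and start line.
            key = f"{path}:{node.name}:{node.lineno}"
            records.append(
                {
                    "function_id": hashlib.sha256(key.encode()).hexdigest(),
                    "path": path,
                    "name": node.name,
                    "start_line": node.lineno,
                    "code": ast.get_source_segment(source, node),
                }
            )
    return records


def scan_message(function_id: str, repository: str) -> dict:
    """Parameters for an SQS FIFO send_message call announcing one function."""
    return {
        "MessageBody": json.dumps({"function_id": function_id}),
        # Messages sharing a group ID are delivered in order; grouping by
        # repository is an assumption, not the documented behavior.
        "MessageGroupId": repository,
        # A resend of the same function within the deduplication window is dropped.
        "MessageDeduplicationId": function_id,
    }
```

With boto3, the returned parameters could be passed as `sqs.send_message(QueueUrl=queue_url, **scan_message(function_id, repository))`, with `queue_url` pointing at `llm_scan.fifo`. Writing the function records to DynamoDB is left out of the sketch.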

Vulnerability analysis

- Function analysis:

  - The function’s embedding is generated using Voyage AI, producing a vector that represents its behavioral traits.

  - Semantic and functional characteristics are extracted using Amazon Nova Pro (through Amazon Bedrock) to provide deeper context.

- Similarity search:

  - A query is executed in Amazon OpenSearch to retrieve the 10 most similar previously reported vulnerabilities.

- Patch generation:

  - For each of the 10 similar vulnerabilities, an AI-driven patch is generated to provide a potential fix.

  - If the function under evaluation remains unpatched, the suggested fix is documented.

- Validation with OpenAI:

  - An OpenAI model is used to verify whether the function contains a vulnerability similar to the retrieved cases.

  - It also checks whether the function already applies a fix similar to the suggested patches.

- Vulnerability reporting:

  - If the function is deemed vulnerable, the issue is stored in DynamoDB with essential metadata.

  - Simultaneously, a message containing the function’s analysis result is sent to the SQS queue `llm_report`.

- Final reporting:

  - A separate worker listens to `llm_report`, processes the vulnerability data, and submits the findings to the Fluid Attacks platform for client review.
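The per-function flow described above can be summarized in a short sketch. The Voyage AI embedding call, the patch-generating LLM, and the OpenAI validation step are passed in as hypothetical callables (`embed`, `suggest_patch`, `is_vulnerable`); the Nova Pro characteristics extraction is omitted; and the OpenSearch query is replaced by a brute-force cosine-similarity search over an in-memory list. Stopping at the first confirmed match is also an assumption.

```python
import math
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    """A previously reported vulnerability returned by the similarity search."""

    vulnerability_id: str
    code: str
    embedding: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k_similar(query: list[float], knowledge_base: list[Candidate], k: int = 10) -> list[Candidate]:
    """Brute-force stand-in for the OpenSearch k-NN query: top k by cosine similarity."""
    ranked = sorted(
        knowledge_base, key=lambda c: cosine_similarity(query, c.embedding), reverse=True
    )
    return ranked[:k]


def analyze_function(
    code: str,
    embed: Callable[[str], list[float]],
    knowledge_base: list[Candidate],
    suggest_patch: Callable[[str, Candidate], str],
    is_vulnerable: Callable[[str, Candidate, str], bool],
) -> Optional[dict]:
    """Return a finding for the first confirmed candidate, or None if none match."""
    for candidate in top_k_similar(embed(code), knowledge_base):
        patch = suggest_patch(code, candidate)
        # The validator decides whether `code` shares the candidate's flaw
        # and has not already applied a fix similar to `patch`.
        if is_vulnerable(code, candidate, patch):
            return {"similar_to": candidate.vulnerability_id, "suggested_fix": patch}
    return None
```

In the real pipeline, a non-`None` result would presumably correspond to the finding stored in DynamoDB and announced on `llm_report`.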

Error handling with SQS and workers

- Resilience mechanisms:

  - SQS queues and workers provide fault tolerance by handling potential failures in message processing.

  - If a worker fails to process a message, the message is not deleted; SQS makes it visible again once its visibility timeout expires, so it is retried automatically.

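- Network error mitigation:

  - Because messages are processed asynchronously, network failures do not halt operations.

  - Messages remain in the queue until a worker successfully processes and deletes them, preventing data loss as long as processing succeeds within the queue’s message retention period.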
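The retry behavior can be illustrated with a toy in-memory queue. This is not the SQS API; it only models the rule that a message stays in the queue until a worker finishes it successfully. The `max_receives` cap and the dead-letter list are assumptions modeled on an SQS redrive policy, added so that a message that always fails does not loop forever.

```python
from collections import deque
from typing import Callable


class InMemoryQueue:
    """Toy model of SQS at-least-once delivery.

    A received message is removed only when the handler succeeds; otherwise it
    goes back to the queue (as after a visibility timeout) and is retried.
    Messages that fail `max_receives` times are moved to a dead-letter list.
    """

    def __init__(self, max_receives: int = 3) -> None:
        self.max_receives = max_receives
        self.visible: deque = deque()
        self.dead_letter: list = []

    def send(self, body: str) -> None:
        self.visible.append({"body": body, "receive_count": 0})

    def run_worker(self, handler: Callable[[str], None]) -> None:
        """Process messages until the queue is empty, retrying failures."""
        while self.visible:
            message = self.visible.popleft()
            message["receive_count"] += 1
            try:
                handler(message["body"])
            except Exception:
                # Not deleted: requeue for another attempt, or give up after the cap.
                if message["receive_count"] >= self.max_receives:
                    self.dead_letter.append(message)
                else:
                    self.visible.append(message)
            # On success the message is simply dropped, which models deletion.
```

A handler that raises on a transient network error leaves its message in the queue, so a later attempt can still succeed without any data being lost.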

Data security and privacy

OpenAI

OpenAI protects the privacy of enterprise customer data by ensuring API-processed content is not used for training models. Data use is governed by specific contracts with customers. (OpenAI Privacy Policy)

OpenAI’s servers are currently located in the United States, although OpenAI plans to expand globally in the future. (Infrastructure)

Security and compliance

- OpenAI’s physical security is managed by Azure, whose facilities follow Microsoft’s corporate security program, established policies, and procedures. (Physical Security)

- The data retention period for data transmitted through the API may vary depending on certain conditions. For the specific use case of LLM Scanner, the retention period is 30 days. After this period, the data will be deleted unless exceptional legal reasons prevent its removal. (How we use your data)

- The data sent to OpenAI is encrypted at rest with AES-256 and in transit using TLS 1.2, both between OpenAI and customers, and between OpenAI and service providers.

- OpenAI holds compliance certifications for CCPA, CSA STAR, GDPR, SOC 2, SOC 3, and TX-RAMP.

Amazon Bedrock

Amazon Bedrock ensures customer data privacy by using private model copies, not sharing or using data for model improvement, encrypting data, and providing secure connectivity options. (AWS Bedrock Security)

Voyage AI

Voyage AI, by default, utilizes customer data for training and improving AI models. However, Fluid Attacks explicitly opts out of this default setting, ensuring that client data provided to Voyage AI is used solely for generating embeddings and is not leveraged for any model training or improvement purposes. (Voyage AI Privacy Policy)

Some additional points to consider:

- Voyage AI hosts its infrastructure in the USA.

- Since Fluid Attacks opted out of data collection, the data is not stored on Voyage AI’s servers (zero storage time).

- Data transmitted to Voyage AI is encrypted in transit with SSL in all its APIs.

- Voyage AI has GDPR, SOC 2, and HIPAA compliance certifications.

To do

- Consider using only AWS services if client concerns arise over external model providers.

- Evaluate deploying Voyage AI’s voyage-code-3 model or AWS embedding models on Amazon EC2.

- Explore deploying an open-source LLM on Amazon EC2, using On-Demand or Spot Instances, to address client privacy concerns.

Learn more

Tip

Have an idea to simplify our architecture or noticed docs that could use some love? Don't hesitate to open an issue or submit improvements.