Compute

Compute is the component of Runs in charge of providing out-of-band processing. It can run jobs both on demand and on schedule.

Public Oath

Fluid Attacks will constantly look for out-of-band computing solutions that balance:

- Cost

- Security

- Scalability

- Speed

- Traceability

Such solutions must also be:

- Cloud based

- Integrable with the rest of our stack

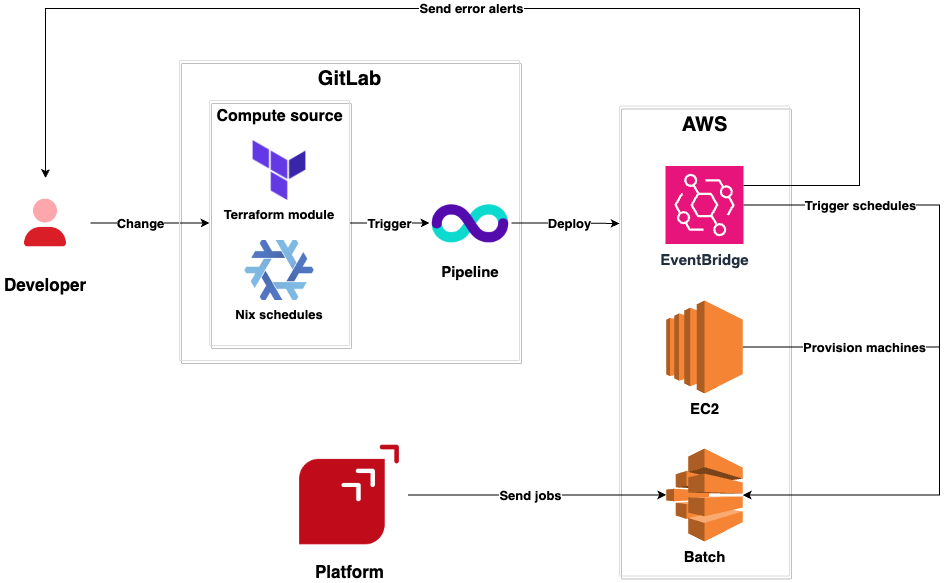

Architecture

- The module is managed as code using Terraform.

- Batch jobs use AWS EC2 Spot machines.

- Spot machines have Internet access.

- Spot machines are of `aarch64-linux` architecture.

- Batch jobs can run only for as long as the underlying EC2 Spot instance lasts, so design them with idempotency and retry mechanisms in mind.

- Jobs can be submitted to Batch in two ways:

  - Using curl, boto3, or any other tool that can interact with the AWS API.

  - Defining a scheduler that periodically submits a job to a queue.

- AWS EventBridge is used to trigger scheduled jobs.

- On failure, an email is sent to development@fluidattacks.com.

- Batch machines come in two sizes:

  - `small`, with 1 vCPU and 8 GiB of memory.

  - `large`, with 2 vCPUs and 16 GiB of memory.

- All runners have internal solid-state drives for maximum performance.

- A special compute environment called `warp`, meant for cloning repositories via Cloudflare WARP, uses machines with 2 vCPUs and 4 GiB of memory on an `x86_64-linux` architecture.

- Compute environments use subnets in all availability zones within `us-east-1` for maximum spot availability.
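Because a Spot instance can be reclaimed at any time, a submission usually pairs an idempotent job with a retry strategy. The following is a minimal sketch, assuming boto3 is the tool used to reach the AWS API. It builds the keyword arguments for the Batch client's `submit_job` call. The job name, queue name, job definition name, and command used with it are hypothetical placeholders, not values taken from this module.

```python
from typing import Any, Dict, List


def build_submit_job_request(
    job_name: str,
    job_queue: str,
    job_definition: str,
    command: List[str],
    attempts: int = 3,
    timeout_seconds: int = 3600,
) -> Dict[str, Any]:
    """Build keyword arguments for the AWS Batch SubmitJob API."""
    if not 1 <= attempts <= 10:
        # AWS Batch accepts between 1 and 10 attempts per job.
        raise ValueError("attempts must be between 1 and 10")
    if timeout_seconds < 60:
        # AWS Batch requires a timeout of at least 60 seconds.
        raise ValueError("timeout_seconds must be at least 60")
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "containerOverrides": {"command": command},
        # Retries let a job restart after a Spot interruption.
        "retryStrategy": {"attempts": attempts},
        "timeout": {"attemptDurationSeconds": timeout_seconds},
    }


def submit(request: Dict[str, Any]) -> str:
    """Send the request to AWS Batch and return the job ID."""
    # Imported lazily so the builder above works without boto3 installed.
    import boto3

    client = boto3.client("batch", region_name="us-east-1")
    return client.submit_job(**request)["jobId"]
```

Keeping the request builder separate from the network call makes the parameters easy to review and test without AWS credentials. Retries only help if the job itself tolerates being restarted from the beginning.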

Contributing

Please read the contributing page first.

General

- You can access the Batch console after authenticating to AWS via Okta.

- If a scheduled job takes longer than six hours, it should generally run in Batch; otherwise, you can use the CI.

Schedulers

Schedulers are a powerful way to run tasks periodically.

You can find all schedulers in the GitLab project.

Creating a new scheduler

We highly advise you to take a look at the currently existing schedulers to get an idea of what is required.

Some special considerations are:

- The `scheduleExpression` option follows the AWS schedule expression syntax.
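As an illustration of that syntax, here is a shallow Python check for the two expression forms used by EventBridge rules, `rate(...)` and `cron(...)`. It is a sketch only: it verifies the overall shape, not the allowed values of each cron field, and the function name is hypothetical rather than part of the Compute tooling.

```python
import re

_RATE = re.compile(r"^rate\((\d+) (minute|minutes|hour|hours|day|days)\)$")
_CRON = re.compile(r"^cron\((.+)\)$")


def is_valid_schedule_expression(expression: str) -> bool:
    """Shallow shape check for rate(...) and cron(...) expressions."""
    rate = _RATE.match(expression)
    if rate:
        value, unit = int(rate.group(1)), rate.group(2)
        # The unit is singular for a value of 1 and plural otherwise.
        return value > 0 and (unit.endswith("s") != (value == 1))
    cron = _CRON.match(expression)
    if cron:
        # Fields: minutes, hours, day-of-month, month, day-of-week, year.
        fields = cron.group(1).split()
        if len(fields) != 6:
            return False
        day_of_month, day_of_week = fields[2], fields[4]
        # AWS does not allow both day fields to carry a value,
        # so at least one of them must be "?".
        return day_of_month == "?" or day_of_week == "?"
    return False
```

For example, `rate(5 minutes)` and `cron(0 12 * * ? *)` pass this check, while a five-field Unix crontab entry such as `0 12 * * *` does not.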

Testing the schedulers

Schedulers are tested by two jobs:

- `runs-compute-core schedule-test` ensures that:

  - all schedulers comply with a given schema;

  - all schedulers have at least one maintainer with access to the universe repository;

  - every schedule is reviewed by a maintainer every month.

- `runs-compute-infra apply` tests the infrastructure that will be deployed when new schedulers are created.
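To make the maintainer and review rules concrete, here is a rough Python sketch of how such checks could be expressed. It is not the implementation behind `runs-compute-core schedule-test`. The field names `maintainers` and `last_reviewed` and the 31-day window are assumptions made for illustration only.

```python
from datetime import date, timedelta
from typing import Dict, List, Set


def find_schedule_problems(
    schedules: Dict[str, dict],
    repo_members: Set[str],
    today: date,
    max_review_age_days: int = 31,  # hypothetical "every month" window
) -> List[str]:
    """Return one message per rule violation, sorted by schedule name."""
    problems: List[str] = []
    for name, schedule in sorted(schedules.items()):
        # Hypothetical field: list of maintainer usernames.
        maintainers = schedule.get("maintainers", [])
        if not any(member in repo_members for member in maintainers):
            problems.append(f"{name}: no maintainer with repository access")
        # Hypothetical field: date of the last maintainer review.
        last_reviewed = schedule.get("last_reviewed")
        overdue = last_reviewed is None or (
            today - last_reviewed > timedelta(days=max_review_age_days)
        )
        if overdue:
            problems.append(f"{name}: review is overdue")
    return problems
```

An empty result means every schedule has an active maintainer and a recent review under these assumed rules.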

Deploying schedulers to production

Once a scheduler reaches production, the required infrastructure for running it is created.

Technical details can be found in our GitLab project.

Local reproducibility in schedulers

Once a new schedule is declared, it can be submitted to AWS Batch using the Compute Core CLI: `runs-compute-core schedule-job <schedule_name>`. Before submitting the job, you need to assume a role with sufficient permissions using `direnv allow`, i.e., `prod_integrates`.

Bear in mind that `runs/compute/schedule/default.nix` is the single source of truth regarding schedules. Everything is defined there, with a few exceptions.

Testing compute environments

Testing compute environments is hard for multiple reasons:

- Environments use init data that is critical for properly provisioning machines.

- Environments require AWS AMIs that are especially optimized for ECS.

- When upgrading an AMI, many things within the machines change, including the version of `cloud-init` (the software that initializes the machine using the init data provided) and the GLIBC version, among many others.

- There is no comfortable way to test this locally or in CI, which forces us to rely on test environments that run in production.

Below is a step-by-step guide to testing environments.

Caution

We do this to make sure that production environments will work properly with a given change, which means that parity between test and production environments should be as high as possible.

- Change the `test` environment with whatever changes you want to test.

- Use `direnv` to assume the AWS `prod_common` role.

- Export `CACHIX_AUTH_TOKEN` in your environment. You can find this variable in GitLab’s CI/CD variables. If you do not have access to it, ask a maintainer.

- Deploy the changes made to the environment you want to test with `runs-compute-infra apply`.

  Caution

  Keep in mind that other deployments to production can overwrite your local deployment. You can avoid this by re-running the command and never saying `yes` to the prompt.

- Queue compute test jobs with `runs-compute-core schedule-job runs_compute_test_environment_default` or `runs-compute-core schedule-job runs_compute_test_environment_warp`.

- Review that jobs are running properly on the `test` environment.

- Extend your changes to the production environments.

Tip

The tests executed by these compute jobs are located in runs/compute/test/environment; feel free to modify them as you see fit.
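When reviewing the queued test jobs, it can help to summarize their states outside the Batch console. The sketch below assumes boto3 and works on the response shape returned by the Batch client's `describe_jobs` call. The helper names are hypothetical, and the job IDs must come from your own submissions.

```python
from collections import Counter
from typing import Any, Dict, List


def summarize_jobs(response: Dict[str, Any]) -> Dict[str, int]:
    """Count jobs per status (for example RUNNING, SUCCEEDED, FAILED)."""
    return dict(Counter(job["status"] for job in response.get("jobs", [])))


def failed_jobs(response: Dict[str, Any]) -> List[str]:
    """List failed jobs together with the reason reported by AWS Batch."""
    return [
        f'{job["jobName"]}: {job.get("statusReason", "no reason given")}'
        for job in response.get("jobs", [])
        if job["status"] == "FAILED"
    ]


def fetch_jobs(job_ids: List[str]) -> Dict[str, Any]:
    """Fetch job details from AWS Batch (up to 100 job IDs per call)."""
    # Imported lazily so the helpers above work without boto3 installed.
    import boto3

    client = boto3.client("batch", region_name="us-east-1")
    return client.describe_jobs(jobs=job_ids)
```

A typical use would be `failed_jobs(fetch_jobs(job_ids))` after assuming the same role used to queue the jobs.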

